Viterbi decoding

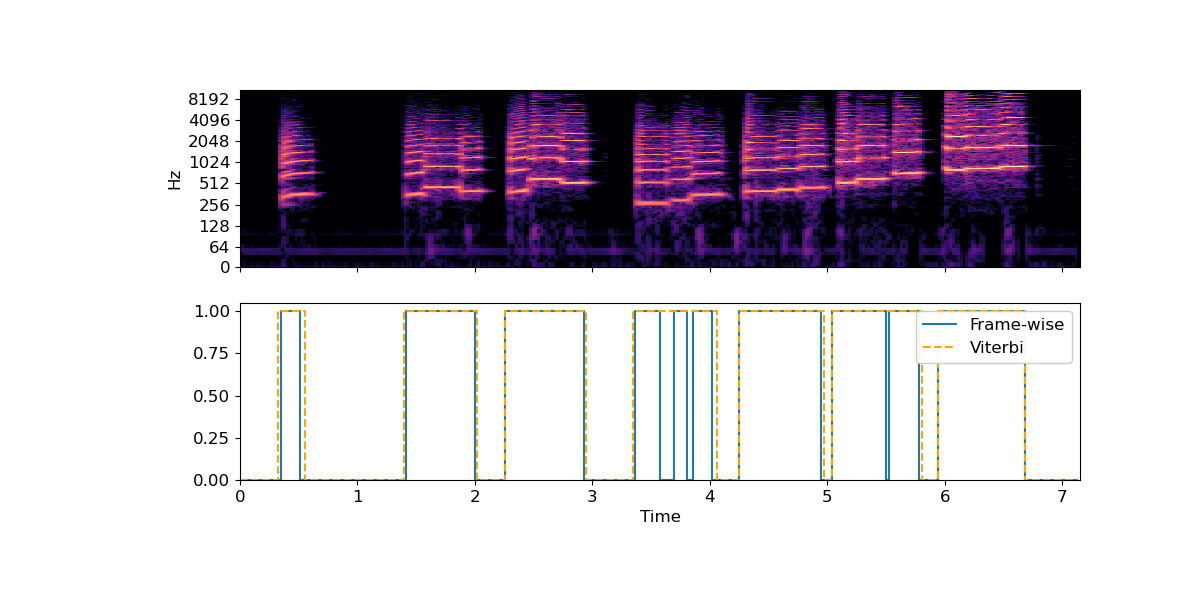

This notebook demonstrates how to use Viterbi decoding to impose temporal smoothing on frame-wise state predictions.

Our working example will be the problem of silence/non-silence detection.

# Code source: Brian McFee

# License: ISC

##################

# Standard imports

from __future__ import print_function

import numpy as np

import matplotlib.pyplot as plt

import librosa

import librosa.display

Load an example signal

y, sr = librosa.load('audio/sir_duke_slow.mp3')

# And compute the spectrogram magnitude and phase

S_full, phase = librosa.magphase(librosa.stft(y))

###################

# Plot the spectrum

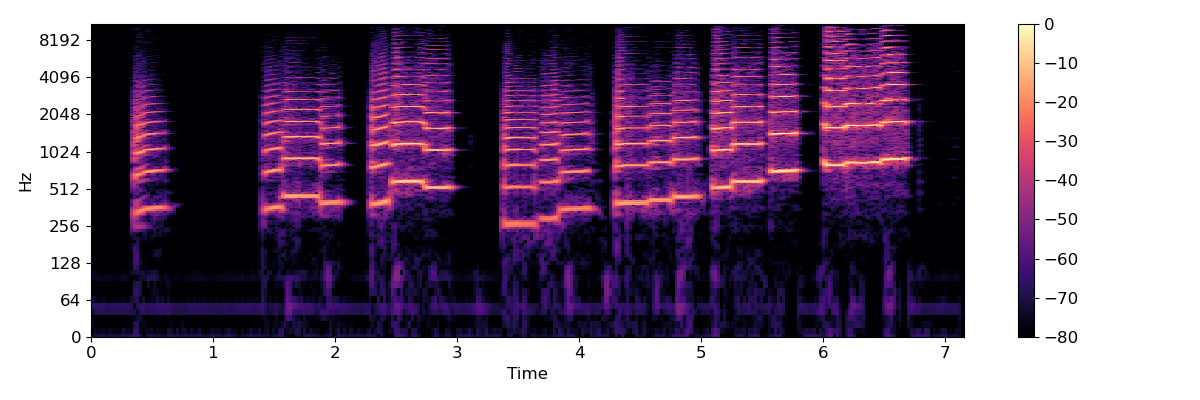

plt.figure(figsize=(12, 4))

librosa.display.specshow(librosa.amplitude_to_db(S_full, ref=np.max),

y_axis='log', x_axis='time', sr=sr)

plt.colorbar()

plt.tight_layout()

Out:

/tmp/tmpfl4ra6qp/b0064fe7dbe8048b1d4148e61a568b6fe3fca91b/librosa/core/audio.py:161: UserWarning: PySoundFile failed. Trying audioread instead.

warnings.warn('PySoundFile failed. Trying audioread instead.')

/tmp/tmpfl4ra6qp/b0064fe7dbe8048b1d4148e61a568b6fe3fca91b/librosa/display.py:862: MatplotlibDeprecationWarning: The 'basey' parameter of __init__() has been renamed 'base' since Matplotlib 3.3; support for the old name will be dropped two minor releases later.

scaler(mode, **kwargs)

/tmp/tmpfl4ra6qp/b0064fe7dbe8048b1d4148e61a568b6fe3fca91b/librosa/display.py:862: MatplotlibDeprecationWarning: The 'linthreshy' parameter of __init__() has been renamed 'linthresh' since Matplotlib 3.3; support for the old name will be dropped two minor releases later.

scaler(mode, **kwargs)

/tmp/tmpfl4ra6qp/b0064fe7dbe8048b1d4148e61a568b6fe3fca91b/librosa/display.py:862: MatplotlibDeprecationWarning: The 'linscaley' parameter of __init__() has been renamed 'linscale' since Matplotlib 3.3; support for the old name will be dropped two minor releases later.

scaler(mode, **kwargs)

As you can see, there are periods of silence and non-silence throughout this recording.

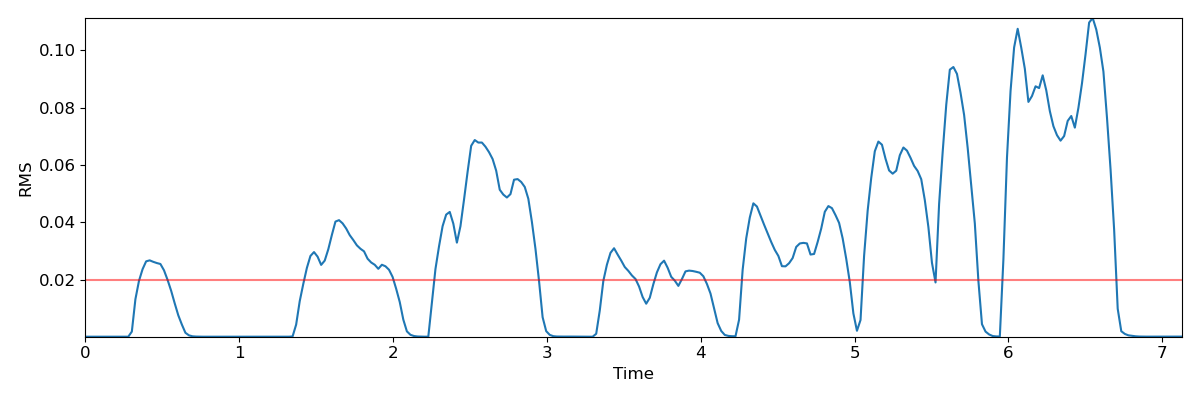

# As a first step, we can plot the root-mean-square (RMS) curve

rms = librosa.feature.rms(y=y)[0]

times = librosa.frames_to_time(np.arange(len(rms)), sr=sr)

plt.figure(figsize=(12, 4))

plt.plot(times, rms)

plt.axhline(0.02, color='r', alpha=0.5)

plt.xlabel('Time')

plt.ylabel('RMS')

plt.axis('tight')

plt.tight_layout()

# The red line at 0.02 indicates a reasonable threshold for silence detection.

# However, the RMS curve occasionally dips below the threshold momentarily,

# and we would prefer the detector to not count these brief dips as silence.

# This is where the Viterbi algorithm comes in handy!

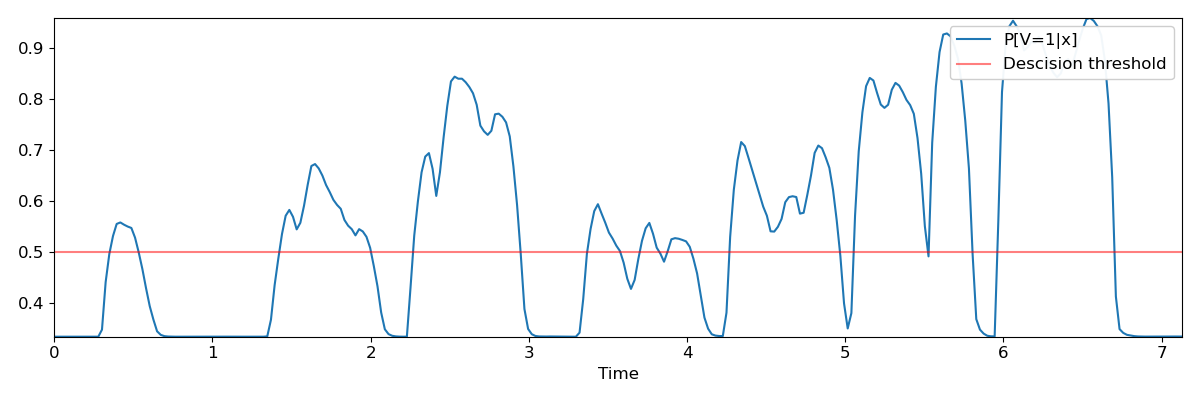

Next, we will convert the raw RMS score into a likelihood (probability) using a logistic mapping:

\(P[V=1 | x] = \frac{\exp(x - \tau)}{1 + \exp(x - \tau)}\)

where \(x\) denotes the RMS value and \(\tau=0.02\) is our threshold. The variable \(V\) indicates whether the signal is non-silent (1) or silent (0).
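As a quick sanity check of the mapping above, the following minimal sketch evaluates it on a handful of made-up RMS values (`rms_demo` is hypothetical, not taken from the recording). It assumes the same standard-deviation scaling that the example applies, and shows that a value sitting exactly at the threshold maps to a probability of 0.5.

```python
import numpy as np

# Hypothetical RMS values, for illustration only
rms_demo = np.array([0.0, 0.01, 0.02, 0.05, 0.2])
tau = 0.02  # silence threshold used in this example

# Shift by the threshold and scale by the standard deviation
x = (rms_demo - tau) / np.std(rms_demo)

# Logistic mapping exactly as written in the formula
p_direct = np.exp(x) / (1 + np.exp(x))

# Algebraically equivalent form; it avoids the inf/inf case
# that the direct form hits for very large positive inputs
p_equiv = 1.0 / (1.0 + np.exp(-x))

print(p_direct)
```

Values below the threshold map to probabilities under 0.5, and values above it map to probabilities over 0.5, so thresholding `p` at 0.5 is equivalent to thresholding the RMS at `tau`.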

We’ll normalize the RMS by its standard deviation to expand the range of the probability vector:

r_normalized = (rms - 0.02) / np.std(rms)

p = np.exp(r_normalized) / (1 + np.exp(r_normalized))

# We can plot the probability curve over time:

plt.figure(figsize=(12, 4))

plt.plot(times, p, label='P[V=1|x]')

plt.axhline(0.5, color='r', alpha=0.5, label='Decision threshold')

plt.xlabel('Time')

plt.axis('tight')

plt.legend()

plt.tight_layout()

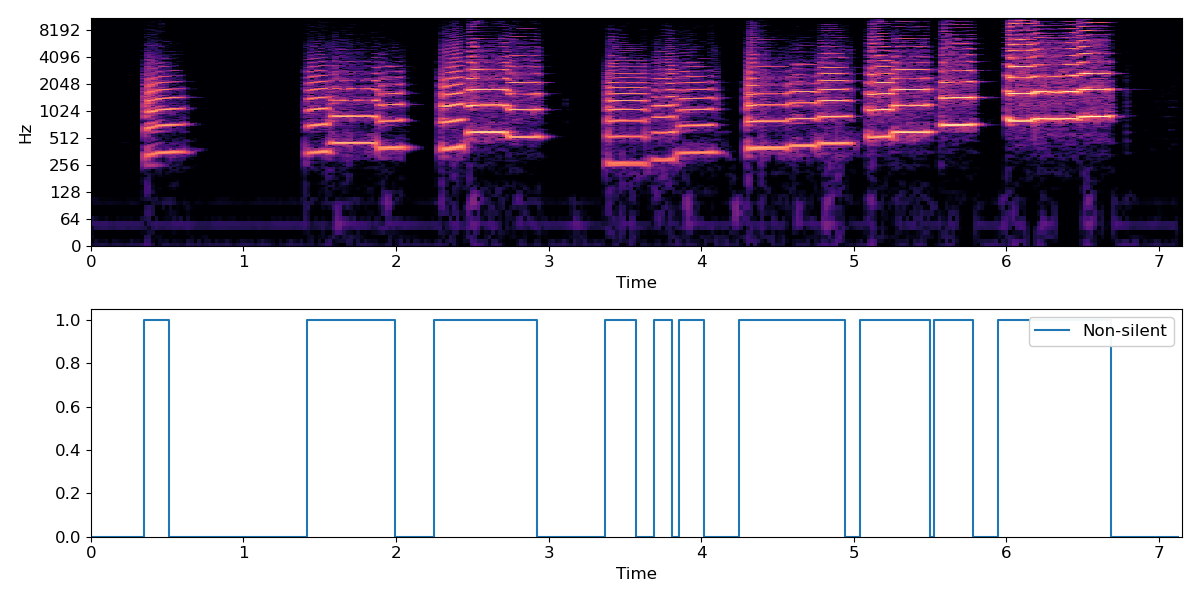

This looks much like the first plot, but with the decision threshold shifted to 0.5. A simple silence detector would classify each frame independently of its neighbors, which would result in the following plot:

plt.figure(figsize=(12, 6))

ax = plt.subplot(2,1,1)

librosa.display.specshow(librosa.amplitude_to_db(S_full, ref=np.max),

y_axis='log', x_axis='time', sr=sr)

plt.subplot(2,1,2, sharex=ax)

plt.step(times, p>=0.5, label='Non-silent')

plt.xlabel('Time')

plt.axis('tight')

plt.ylim([0, 1.05])

plt.legend()

plt.tight_layout()


We can do better using the Viterbi algorithm. We’ll use state 0 to indicate silent, and 1 to indicate non-silent. We’ll assume that a silent frame is equally likely to be followed by silence or non-silence, but that non-silence is slightly more likely to be followed by non-silence. This is accomplished by building a self-loop transition matrix, where transition[i, j] is the probability of moving from state i to state j in the next frame.

transition = librosa.sequence.transition_loop(2, [0.5, 0.6])

print(transition)

Out:

[[0.5 0.5]

[0.4 0.6]]
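To make the structure of this matrix explicit, here is a minimal NumPy sketch that builds a self-loop matrix by hand. The helper `self_loop_transition` is hypothetical and is not part of librosa; it assumes that whatever probability is not spent on the self-loop is split evenly across the other states, which for two states is the only possibility.

```python
import numpy as np

def self_loop_transition(self_probs):
    """Hypothetical helper: build a row-stochastic self-loop matrix.

    self_probs[i] is the probability of staying in state i.
    Assumes at least two states.
    """
    self_probs = np.asarray(self_probs, dtype=float)
    n_states = len(self_probs)
    transition = np.empty((n_states, n_states))
    for i, p_stay in enumerate(self_probs):
        # Spread the leftover probability evenly over the other states
        transition[i] = (1.0 - p_stay) / (n_states - 1)
        # Then set the self-loop probability on the diagonal
        transition[i, i] = p_stay
    return transition

demo_transition = self_loop_transition([0.5, 0.6])
print(demo_transition)
```

Each row sums to one, since row i describes a probability distribution over the next state given that the current state is i.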

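Our p variable only indicates the probability of non-silence, so we need to also compute the probability of silence as its complement. Row 0 of the stacked array holds the probability of silence, and row 1 the probability of non-silence, matching the state numbering above.

full_p = np.vstack([1 - p, p])

print(full_p)

Out: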

[[0.666662 0.66666806 0.66667175 0.6666764 0.6666662 0.6666547

0.66665447 0.6666441 0.6666499 0.6666609 0.6666493 0.6666572

0.6666585 0.65281963 0.5593039 0.50396335 0.4687572 0.44503105

0.44209725 0.44649702 0.45015687 0.45296526 0.47192842 0.50088567

0.533761 0.57154465 0.60663986 0.63306737 0.6560469 0.66306615

0.6656199 0.66632414 0.66658187 0.6666868 0.6666943 0.6666857

0.6666565 0.66665673 0.66667044 0.6666879 0.666713 0.6667024

0.6666863 0.66668093 0.66668844 0.6667025 0.6666882 0.66659105

0.666566 0.66656137 0.66658425 0.6666702 0.6666781 0.6666808

0.66664445 0.6666428 0.66665864 0.6666496 0.66597325 0.6332568

0.565287 0.51315165 0.46493763 0.4289661 0.41747576 0.43100828

0.4557107 0.44279826 0.40919942 0.36801213 0.33117193 0.32734305

0.33589602 0.34995198 0.36854565 0.38243645 0.3977514 0.40770727

0.41529024 0.4371997 0.44843513 0.45536363 0.467516 0.45526844

0.45985198 0.47037637 0.4920172 0.5279733 0.5675844 0.6191045

0.65206957 0.6615278 0.66489464 0.66609466 0.66653544 0.6666804

0.6665775 0.5691235 0.46814352 0.40058243 0.34356403 0.31266928

0.30578673 0.33672208 0.38994128 0.3435294 0.27476007 0.21300626

0.1649099 0.15554106 0.15957916 0.15955073 0.16644585 0.17588961

0.18820965 0.21075332 0.25177717 0.2630303 0.26994652 0.26190835

0.22955096 0.22823179 0.23443395 0.24548042 0.27291352 0.33201182

0.40812153 0.5046619 0.61227214 0.6514728 0.6617671 0.66529995

0.6661643 0.6663485 0.666373 0.6662399 0.666326 0.6662947

0.66633373 0.6665311 0.66661215 0.6667119 0.66673124 0.658774

0.5928714 0.5039606 0.45510268 0.419837 0.40622658 0.42472404

0.44260895 0.46210474 0.47377384 0.48761213 0.49827498 0.5211023

0.5527549 0.57273364 0.5550959 0.51402926 0.4782467 0.45356333

0.44303155 0.4642986 0.4916439 0.5032611 0.51925266 0.49856973

0.47524315 0.47296286 0.4741637 0.47653228 0.4792863 0.48997647

0.5133821 0.5423028 0.5859402 0.6291387 0.6509739 0.6619408

0.6645152 0.6653484 0.6654525 0.61951697 0.4705053 0.3777169

0.32032567 0.284069 0.2920292 0.31536585 0.33947343 0.3635468

0.38816082 0.41106814 0.4289037 0.45955908 0.46007532 0.45066845

0.4351374 0.40234917 0.39231366 0.39058787 0.39216518 0.4247653

0.42306644 0.38793647 0.35002232 0.30539632 0.29092264 0.29614902

0.31410837 0.3347454 0.3778159 0.4375978 0.5079247 0.60124874

0.6505161 0.6203172 0.42866933 0.30231488 0.2255764 0.17425478

0.15799701 0.16310495 0.18772101 0.21032327 0.21690273 0.21076733

0.18132639 0.16777033 0.17310756 0.18597764 0.20130521 0.21142936

0.22859299 0.27624983 0.34608388 0.44942784 0.5088073 0.28546447

0.17546159 0.10721201 0.07273531 0.0705992 0.07627285 0.09409553

0.11961037 0.16860425 0.24203545 0.3360399 0.50441825 0.6321925

0.652762 0.66058636 0.66487104 0.6659316 0.6660775 0.43917018

0.18581885 0.09230238 0.05653769 0.04559535 0.05671984 0.07201564

0.10393113 0.09749347 0.08757359 0.08931887 0.07750875 0.09155202

0.11476034 0.13472623 0.1479373 0.15648854 0.14926326 0.12743145

0.12095761 0.13677686 0.11081856 0.08474755 0.06102765 0.04243284

0.04023421 0.04600978 0.05659968 0.07429123 0.12322563 0.208314

0.35310817 0.58769083 0.65173566 0.65917313 0.6629694 0.6642693

0.6655723 0.66618633 0.6663922 0.66648924 0.66649926 0.6664754

0.66647923 0.6664742 0.6664442 0.66638243 0.6663059 0.6663143

0.6663816 0.6663896 ]

[0.33333805 0.3333319 0.33332822 0.3333236 0.3333338 0.33334526

0.33334553 0.33335593 0.33335012 0.3333391 0.33335072 0.33334276

0.33334145 0.34718034 0.44069612 0.49603662 0.5312428 0.55496895

0.55790275 0.553503 0.54984313 0.54703474 0.5280716 0.49911433

0.46623895 0.42845535 0.39336014 0.36693263 0.34395307 0.33693385

0.3343801 0.3336759 0.3334181 0.33331323 0.3333057 0.3333143

0.33334354 0.33334324 0.3333296 0.3333121 0.333287 0.33329758

0.3333137 0.33331904 0.33331153 0.33329752 0.3333118 0.33340892

0.33343402 0.3334386 0.33341572 0.33332983 0.33332193 0.3333192

0.33335555 0.33335724 0.33334136 0.33335045 0.33402675 0.36674318

0.434713 0.48684838 0.5350624 0.5710339 0.58252424 0.5689917

0.5442893 0.55720174 0.5908006 0.63198787 0.66882807 0.67265695

0.664104 0.650048 0.63145435 0.61756355 0.6022486 0.5922927

0.58470976 0.5628003 0.5515649 0.54463637 0.532484 0.54473156

0.540148 0.5296236 0.5079828 0.4720267 0.43241563 0.38089547

0.34793046 0.3384722 0.33510536 0.33390537 0.33346456 0.3333196

0.33342248 0.4308765 0.5318565 0.59941757 0.65643597 0.6873307

0.6942133 0.6632779 0.6100587 0.6564706 0.72523993 0.78699374

0.8350901 0.84445894 0.84042084 0.8404493 0.83355415 0.8241104

0.81179035 0.7892467 0.7482228 0.7369697 0.7300535 0.73809165

0.77044904 0.7717682 0.76556605 0.7545196 0.7270865 0.6679882

0.5918785 0.49533808 0.38772783 0.3485272 0.3382329 0.33470005

0.3338357 0.33365148 0.33362702 0.33376008 0.333674 0.33370528

0.33366627 0.3334689 0.33338785 0.33328804 0.33326873 0.341226

0.4071286 0.49603936 0.5448973 0.580163 0.5937734 0.57527596

0.55739105 0.53789526 0.52622616 0.5123879 0.501725 0.47889766

0.44724515 0.4272664 0.4449041 0.4859707 0.5217533 0.54643667

0.55696845 0.5357014 0.5083561 0.4967389 0.48074734 0.5014303

0.52475685 0.52703714 0.5258363 0.5234677 0.5207137 0.51002353

0.48661795 0.4576972 0.41405982 0.37086132 0.3490261 0.33805922

0.3354848 0.33465162 0.3345475 0.38048306 0.5294947 0.6222831

0.6796743 0.715931 0.7079708 0.68463415 0.6605266 0.6364532

0.6118392 0.58893186 0.5710963 0.5404409 0.5399247 0.54933155

0.5648626 0.5976508 0.60768634 0.60941213 0.6078348 0.5752347

0.57693356 0.6120635 0.6499777 0.6946037 0.70907736 0.703851

0.6858916 0.6652546 0.6221841 0.5624022 0.4920753 0.39875126

0.3494839 0.3796828 0.57133067 0.6976851 0.7744236 0.8257452

0.842003 0.83689505 0.812279 0.7896767 0.78309727 0.7892327

0.8186736 0.8322297 0.82689244 0.81402236 0.7986948 0.78857064

0.771407 0.7237502 0.6539161 0.55057216 0.49119267 0.71453553

0.8245384 0.892788 0.9272647 0.9294008 0.92372715 0.9059045

0.88038963 0.83139575 0.75796455 0.6639601 0.49558178 0.36780748

0.347238 0.3394136 0.33512896 0.3340684 0.3339225 0.5608298

0.81418115 0.9076976 0.9434623 0.95440465 0.94328016 0.92798436

0.8960689 0.90250653 0.9124264 0.9106811 0.92249125 0.908448

0.88523966 0.8652738 0.8520627 0.84351146 0.85073674 0.87256855

0.8790424 0.86322314 0.88918144 0.91525245 0.93897235 0.95756716

0.9597658 0.9539902 0.9434003 0.9257088 0.8767744 0.791686

0.64689183 0.41230914 0.34826434 0.3408269 0.3370306 0.3357307

0.33442768 0.3338137 0.33360776 0.3335108 0.33350074 0.33352455

0.33352077 0.33352575 0.33355585 0.33361757 0.33369413 0.3336857

0.3336184 0.3336104 ]]
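The layout of this two-row array can be checked on a small scale. The sketch below uses made-up probabilities (`p_demo` is hypothetical) and assumes the state ordering described earlier, with state 0 as silent and state 1 as non-silent.

```python
import numpy as np

# Hypothetical per-frame probabilities of non-silence
p_demo = np.array([0.1, 0.5, 0.9])

# Row 0: probability of silence; row 1: probability of non-silence
full_p_demo = np.vstack([1 - p_demo, p_demo])

# Each column is a distribution over the two states for one frame
print(full_p_demo.shape)
print(full_p_demo.sum(axis=0))
```

The array has one row per state and one column per frame, and every column sums to one.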

Now, we’re ready to decode! We’ll use viterbi_discriminative here, since the inputs are state likelihoods conditional on data (in our case, the data is the RMS curve).

states = librosa.sequence.viterbi_discriminative(full_p, transition)

# sphinx_gallery_thumbnail_number = 5

plt.figure(figsize=(12, 6))

ax = plt.subplot(2,1,1)

librosa.display.specshow(librosa.amplitude_to_db(S_full, ref=np.max),

y_axis='log', x_axis='time', sr=sr)

plt.xlabel('')

ax.tick_params(labelbottom=False)

plt.subplot(2, 1, 2, sharex=ax)

plt.step(times, p>=0.5, label='Frame-wise')

plt.step(times, states, linestyle='--', color='orange', label='Viterbi')

plt.xlabel('Time')

plt.axis('tight')

plt.ylim([0, 1.05])

plt.legend()


Note how the Viterbi output has fewer state changes than the frame-wise predictor, and it is less sensitive to momentary dips in energy. This is controlled directly by the transition matrix. A higher self-transition probability means that the decoder is less likely to change states.
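The effect of the transition matrix can be illustrated on a toy sequence. The following is a minimal log-domain Viterbi sketch in plain NumPy, not librosa's implementation: the function `viterbi_sketch`, the made-up probabilities, and both transition matrices are assumptions for illustration. It uses the per-frame state probabilities directly as scores and assumes a uniform initial state distribution.

```python
import numpy as np

def viterbi_sketch(prob, transition):
    """Illustrative Viterbi decoder (hypothetical helper).

    prob: array of shape (n_states, n_frames) with per-frame state scores.
    transition: array of shape (n_states, n_states), rows sum to one.
    """
    n_states, n_frames = prob.shape
    log_prob = np.log(prob)
    log_trans = np.log(transition)

    value = np.zeros((n_frames, n_states))
    back = np.zeros((n_frames, n_states), dtype=int)

    # Uniform initial distribution over states
    value[0] = log_prob[:, 0] + np.log(1.0 / n_states)

    for t in range(1, n_frames):
        # scores[i, j]: best path ending in i at t-1, then moving to j
        scores = value[t - 1][:, None] + log_trans
        back[t] = np.argmax(scores, axis=0)
        value[t] = scores[back[t], np.arange(n_states)] + log_prob[:, t]

    # Trace the best path backwards from the final frame
    states = np.zeros(n_frames, dtype=int)
    states[-1] = np.argmax(value[-1])
    for t in range(n_frames - 2, -1, -1):
        states[t] = back[t + 1, states[t + 1]]
    return states

# Made-up non-silence probabilities with one momentary dip below 0.5
p_toy = np.array([0.9, 0.9, 0.9, 0.45, 0.9, 0.9, 0.9])
full_p_toy = np.vstack([1 - p_toy, p_toy])

uniform = np.full((2, 2), 0.5)               # no preference for staying
sticky = np.array([[0.9, 0.1], [0.1, 0.9]])  # strong self-transitions

states_uniform = viterbi_sketch(full_p_toy, uniform)
states_sticky = viterbi_sketch(full_p_toy, sticky)

# Count how often each decoded path changes state
print(np.count_nonzero(np.diff(states_uniform)))
print(np.count_nonzero(np.diff(states_sticky)))
```

With the uniform matrix the decoder reduces to frame-wise thresholding and follows the dip, while the matrix with high self-transition probabilities keeps the path in the non-silent state throughout.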
